The first thing I learned as a data scientist is that feature selection is one of the most important steps of a machine learning pipeline. Fortunately, some models help us with this task by providing their own measure of feature importance. One such model is Lasso regression.

What is Lasso regression?

I have already talked about Lasso regression in a previous blog post. Let me summarize the main properties of such a model. It is a linear model that uses this cost function:

$$\frac{1}{2 N_{training}} \sum_{i = 1}^{N_{training}} \left(y_{real}^{(i)}-y_{pred}^{(i)}\right)^2 + \alpha \sum_{j = 1}^n |a_j|$$

Here a_j is the coefficient of the j-th feature and n is the number of features. The final term is called the l1 penalty, and α is a hyperparameter that tunes the intensity of this penalty. The larger the absolute value of a coefficient, the higher the value of the cost function, so minimizing the cost pushes the absolute values of the coefficients down. This only works fairly if the features have been scaled beforehand, for example by standardization, because the penalty treats every coefficient alike regardless of the units of its feature. The value of α should be chosen by cross-validation.
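To make the two terms of the cost function concrete, here is a minimal NumPy sketch. The function name `lasso_cost`, the explicit `intercept` argument and the tiny dataset are assumptions made for illustration; they are not part of the original example.

```python
import numpy as np

def lasso_cost(X, y, coef, intercept, alpha):
    """Squared-error term divided by 2N, plus alpha times the l1 norm of coef."""
    residuals = y - (X @ coef + intercept)               # y_real - y_pred
    squared_error_term = (residuals ** 2).sum() / (2 * len(y))
    l1_penalty = alpha * np.abs(coef).sum()              # alpha * sum of |a_j|
    return squared_error_term + l1_penalty

# Tiny made-up dataset, for illustration only
X = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]])
y = np.array([1.0, 2.0, 3.0, 4.0])
coef = np.array([1.0, 2.0])  # these coefficients fit the data exactly

no_penalty = lasso_cost(X, y, coef, intercept=0.0, alpha=0.0)
with_penalty = lasso_cost(X, y, coef, intercept=0.0, alpha=0.5)
print(no_penalty)    # 0.0: perfect fit, no penalty
print(with_penalty)  # 1.5: perfect fit, but 0.5 * (|1| + |2|) of penalty
```

Even a perfect fit has a non-zero cost once α is positive, which is what pushes the optimizer towards smaller coefficients.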

You can find an extensive explanation of Lasso regression in my “Supervised Machine Learning with Python” online course. In this course, I cover several machine learning models and how to get the highest value from them.

How can we use it for feature selection?

While minimizing the cost function, Lasso regression shrinks the coefficients of features that contribute little to the prediction, or that are redundant with other features, all the way to 0. A feature whose coefficient is exactly 0 has no effect on the prediction, so it is effectively discarded.
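Why exactly 0, and not just a small number? In the simplest case of a single standardized feature x and a centered target, the minimizer of the cost function above has a closed form: take ρ, the average of x·y over the training set (the coefficient an unpenalized fit would give), shrink it towards 0 by α, and set it to 0 if it does not survive the shrinkage. This is known as soft-thresholding. The sketch below illustrates only this one-feature case; the function name is an assumption.

```python
import numpy as np

def soft_threshold(rho, alpha):
    """One-feature Lasso solution: shrink rho towards 0 by alpha, clip at 0."""
    return float(np.sign(rho) * np.maximum(np.abs(rho) - alpha, 0.0))

alpha = 0.5
for rho in [2.0, -1.0, 0.25]:
    print(rho, "->", soft_threshold(rho, alpha))
# 2.0 -> 1.5     strong feature: kept, but shrunk
# -1.0 -> -0.5   sign is preserved
# 0.25 -> 0.0    weaker than alpha: discarded
```

A feature survives only if its relationship with the target is stronger than α, which is why the choice of α decides how many features are kept.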

So, the idea of using Lasso regression for feature selection is very simple: we fit a Lasso regression on a scaled version of our dataset and keep only those features whose coefficient is different from 0. Of course, we first need to tune the α hyperparameter, because it determines how many coefficients are driven to 0.

This makes it easy to detect the useful features and discard the useless ones.

Let’s see how to do it in Python.

Example in Python

In this example, I’m going to show you how to use Lasso for feature selection in Python using the diabetes dataset. You can find the whole code in my GitHub repository.

First, let’s import some libraries:

import numpy as np
from sklearn.preprocessing import StandardScaler
from sklearn.pipeline import Pipeline
from sklearn.model_selection import train_test_split, GridSearchCV
from sklearn.linear_model import Lasso

Then, we can import our dataset and the names of the features.

from sklearn.datasets import load_diabetes
X, y = load_diabetes(return_X_y=True)
features = load_diabetes()['feature_names']

As always, we can now split our dataset into training and test sets and perform all the calculations on the training set only.

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.33, random_state=42)

Now we have to build our model, optimize its hyperparameter and train it on the training dataset.

Since our dataset needs to be scaled in advance, we can make use of the powerful Pipeline object in scikit-learn. Our pipeline consists of a StandardScaler followed by the Lasso object itself.

pipeline = Pipeline([
    ('scaler', StandardScaler()),
    ('model', Lasso())
])

Now we have to optimize the α hyperparameter of Lasso regression. For this example, we test values from 0.1 to 9.9 in steps of 0.1 (np.arange excludes the upper bound of 10). For each value, we calculate the average mean squared error in a 5-fold cross-validation and select the value of α that minimizes it. We can use the GridSearchCV object for this purpose.

search = GridSearchCV(pipeline,
                      {'model__alpha': np.arange(0.1, 10, 0.1)},
                      cv=5, scoring="neg_mean_squared_error", verbose=3
                      )

We use neg_mean_squared_error because the grid search always maximizes its scoring metric. Maximizing the negative of the mean squared error is equivalent to minimizing the mean squared error itself.
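Both points can be checked quickly with NumPy alone. The sketch below inspects the grid passed to the search and illustrates the sign convention with made-up error values. The `np.linspace` variant is a suggested alternative for including 10 in the grid, not something the original code uses.

```python
import numpy as np

# The grid used in the search: np.arange excludes the stop value
alphas = np.arange(0.1, 10, 0.1)
print(len(alphas))              # 99 candidate values
print(alphas.max() < 10)        # True: 10 itself is never tested

# Floating-point steps are not always round decimals, so a grid value
# such as 1.2 may be displayed with trailing digits
print(alphas[11])

# Possible alternative (assumption): np.linspace includes both endpoints
alphas_linspace = np.linspace(0.1, 10, 100)

# Sign convention, with made-up MSE values for three candidates
mse_per_candidate = np.array([4.0, 2.5, 3.0])
scores = -mse_per_candidate     # what "neg_mean_squared_error" reports
best_by_score = int(np.argmax(scores))
best_by_mse = int(np.argmin(mse_per_candidate))
print(best_by_score == best_by_mse)  # True: same candidate either way
```

One consequence of this convention is that the scores reported by the grid search are negative numbers, and values closer to 0 are better.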

We can now fit the grid search.

search.fit(X_train, y_train)

The best value for α is:

search.best_params_
# {'model__alpha': 1.2000000000000002}

Now, we have to get the values of the coefficients of Lasso regression.

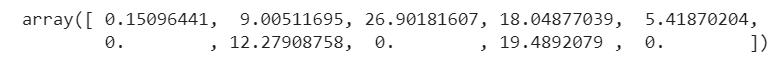

coefficients = search.best_estimator_.named_steps['model'].coef_The importance of a feature is the absolute value of its coefficient, so:

importance = np.abs(coefficients)Let’s take a look at the importances:

As we can see, there are 3 features with 0 importance. Those features have been discarded by our model.
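To see which feature each importance belongs to, the names and values can be paired and sorted. This is a minimal sketch rather than the original code: to keep it self-contained and quick, it refits the pipeline with α fixed at 1.2 (the grid search result reported above, rounded) instead of repeating the search, and the variable name `ranked` is an assumption.

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.linear_model import Lasso
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

data = load_diabetes()
X, y, features = data.data, data.target, data.feature_names
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.33, random_state=42
)

# Same pipeline as in the article, with alpha fixed instead of searched
pipeline = Pipeline([
    ("scaler", StandardScaler()),
    ("model", Lasso(alpha=1.2)),
])
pipeline.fit(X_train, y_train)

importance = np.abs(pipeline.named_steps["model"].coef_)

# Pair each feature name with its importance, largest first
ranked = sorted(zip(features, importance), key=lambda pair: pair[1], reverse=True)
for name, value in ranked:
    print(f"{name:>4}  {value:8.3f}")
```

Features printed with an importance of 0.000 at the bottom of the list are the ones the model has discarded.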

The features that survived the Lasso regression are:

np.array(features)[importance > 0]
# array(['age', 'sex', 'bmi', 'bp', 's1', 's3', 's5'], dtype='<U3')

While the 3 discarded features are:

np.array(features)[importance == 0]
# array(['s2', 's4', 's6'], dtype='<U3')

In this way, we have used a properly tuned Lasso regression to find out which features of our dataset matter most for the given target variable.
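If the goal is to pass only the surviving columns to another model, scikit-learn's `SelectFromModel` can apply the same rule for us. The article does not use it, so treat the following as a sketch under assumptions: α is fixed at 1.2 (the rounded grid search result) and the scaling is done by hand instead of through the pipeline. For L1-penalized estimators such as Lasso, `SelectFromModel` keeps the features whose absolute coefficient is at least a small default threshold (1e-5), which in practice matches the `importance > 0` rule used above.

```python
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.feature_selection import SelectFromModel
from sklearn.linear_model import Lasso
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

data = load_diabetes()
X, y, features = data.data, data.target, data.feature_names
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.33, random_state=42
)

# Fit the scaler on the training set only, then reuse it for the test set
scaler = StandardScaler().fit(X_train)
X_train_scaled = scaler.transform(X_train)
X_test_scaled = scaler.transform(X_test)

# SelectFromModel fits the Lasso and remembers which coefficients survived
selector = SelectFromModel(Lasso(alpha=1.2)).fit(X_train_scaled, y_train)
mask = selector.get_support()             # boolean mask over the features

X_train_selected = selector.transform(X_train_scaled)
X_test_selected = selector.transform(X_test_scaled)

print(np.array(features)[mask])           # names of the selected features
print(X_train_selected.shape, X_test_selected.shape)
```

The reduced matrices can then be used to train any other model on the selected features only.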

Conclusions

Lasso regression has a very powerful built-in feature selection capability that can be used in several situations. However, it has some drawbacks as well. For example, if the relationship between the features and the target variable is not linear, a linear model might not be a good choice, and its coefficients may then understate the importance of a feature. As usual, a proper Exploratory Data Analysis can help us better understand the most important relationships between the features and the target, and so help us choose the best model.

Great article! How can I plot the result?

You can use a bar plot. The labels are the feature names and the height of each bar is the absolute value of that feature's coefficient according to Lasso regression.
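A minimal sketch of such a bar plot with matplotlib follows. It is not the author's code: the pipeline is refit with α fixed at 1.2 (the rounded grid search result) so the block runs on its own, the non-interactive "Agg" backend is selected so that it also works without a display, and the output file name `lasso_importance.png` is a placeholder.

```python
import matplotlib
matplotlib.use("Agg")  # assumption: render to a file; remove for interactive use
import matplotlib.pyplot as plt
import numpy as np
from sklearn.datasets import load_diabetes
from sklearn.linear_model import Lasso
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

data = load_diabetes()
X, y, features = data.data, data.target, data.feature_names
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.33, random_state=42
)

pipeline = Pipeline([
    ("scaler", StandardScaler()),
    ("model", Lasso(alpha=1.2)),
])
pipeline.fit(X_train, y_train)
importance = np.abs(pipeline.named_steps["model"].coef_)

# One bar per feature; the height is the absolute value of its coefficient
fig, ax = plt.subplots()
bars = ax.bar(features, importance)
ax.set_xlabel("Feature")
ax.set_ylabel("Absolute value of Lasso coefficient")
ax.set_title("Feature importance according to Lasso regression")
fig.tight_layout()
fig.savefig("lasso_importance.png")  # placeholder file name
```

Discarded features show up as missing bars, since their height is 0.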